Non paywalled link https://archive.is/VcoE1

It basically boils down to making the browser do some CPU-heavy calculations before allowing access. This is no problem for a single user, but for a bot farm this would increase the amount of compute power they need 100x or more.

Exactly. It’s called proof-of-work and was originally invented to reduce spam emails but was later used by Bitcoin to control its growth speed
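
For anyone who wants to see the idea rather than read about it, here is a minimal hashcash-style sketch. It is not Anubis's actual code: the challenge string, the "leading zero hex digits" rule, and the function names `solve` and `verify` are assumptions made for illustration. The point is the asymmetry: the client has to try many hashes, the server checks the answer with one.

```python
import hashlib
from itertools import count


def solve(challenge: str, difficulty: int) -> tuple[int, int]:
    """Search for a nonce whose hash starts with `difficulty` zero hex digits.

    Returns (nonce, attempts). This is the expensive part, done by the client.
    """
    prefix = "0" * difficulty
    for nonce in count():
        digest = hashlib.sha256(f"{challenge}{nonce}".encode()).hexdigest()
        if digest.startswith(prefix):
            # Nonces are tried in order from 0, so attempts is nonce + 1.
            return nonce, nonce + 1


def verify(challenge: str, nonce: int, difficulty: int) -> bool:
    """Check a claimed solution with a single hash. This is the server's side."""
    digest = hashlib.sha256(f"{challenge}{nonce}".encode()).hexdigest()
    return digest.startswith("0" * difficulty)


if __name__ == "__main__":
    # "example-challenge" and difficulty 4 are made-up values.
    nonce, attempts = solve("example-challenge", 4)
    print(f"nonce={nonce} found after {attempts} hashes")
    print("valid:", verify("example-challenge", nonce, 4))
```

Each extra zero digit multiplies the expected work by 16, which is why one visitor barely notices while a crawler fetching millions of pages has to pay for every one of them.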

It’s funny that older captchas could be viewed as proof of work algorithms now because image recognition is so good. (From using captchas.)

Interesting stance. I have bought many tens of thousands of captcha solves for legitimate reasons, and I have now completely lost faith in them

That’s actually a good idea. A very simple “click the frog” captcha might be solvable by an AI but it would work as a way to make it more expensive for crawlers without wasting compute resources (energy!) on the user or slowing down old devices to a crawl. So in some ways it could be a better alternative to Anubis.

it wasn’t made for bitcoin originally? didn’t know that!

Originally called hashcash: http://hashcash.org/

you know it’s old when it doesn’t have ssl

TIL

It inherently blocks a lot of the simpler bots by requiring JavaScript as well.

Thank you for the link. Good read

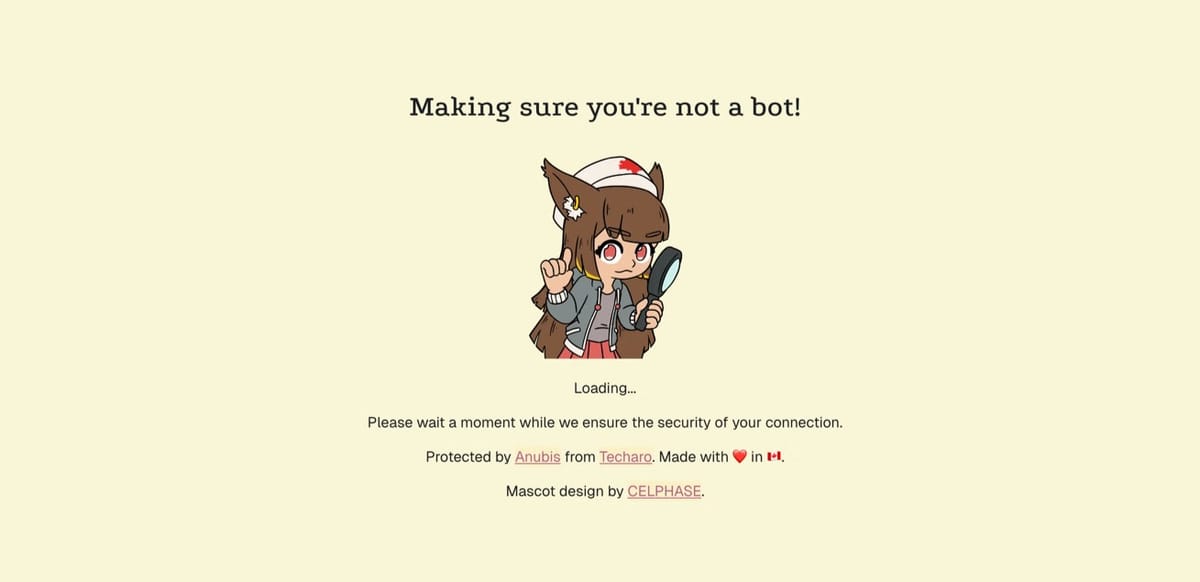

I know people love anime myself included, but this popping up on my work PC can be frustrating

Contact the administrator to ask them to change the landing page

Every time I see Anubis I get happy because I know the website has some quality information.

I’ve seen this pop up on websites a lot lately. Usually it takes a few seconds to load the website but there have been occasions where it seemed to hang as it was stuck on that screen for minutes and I ended up closing my browser tab because the website just wouldn’t load.

Is this a (known) issue or is it intended to be like this?

anubis is basically a bitcoin miner, with the difficulty turned way down (and obviously not resulting in any coins), so it’s inherently random. if it takes minutes it does seem like something is wrong though. maybe a network error?
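
To put numbers on "inherently random": if each hash succeeds independently with a fixed probability, the number of attempts follows a geometric distribution. The sketch below assumes a rule of `difficulty` leading zero hex digits (so a success chance of 16^-difficulty per hash), which is an assumption for illustration and may not match how any given site is configured.

```python
def expected_hashes(difficulty: int) -> int:
    """Mean number of attempts when each hash succeeds with chance 16**-difficulty."""
    return 16 ** difficulty


def chance_of_exceeding(difficulty: int, attempts: int) -> float:
    """Probability that the first `attempts` hashes all fail."""
    p = 16.0 ** -difficulty
    return (1.0 - p) ** attempts


if __name__ == "__main__":
    d = 4  # hypothetical difficulty: four leading zero hex digits
    mean = expected_hashes(d)
    for factor in (1, 3, 10):
        odds = chance_of_exceeding(d, factor * mean)
        print(f"P(more than {factor}x the mean of {mean} hashes) = {odds:.5f}")
```

Under that assumption roughly 37% of solves take longer than the mean and about 5% take more than three times as long, but only a few in 100,000 take more than ten times as long. So some jitter is normal, while a wait that is orders of magnitude longer than usual does point to something else going wrong.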

adding to this, some sites set the difficulty way higher than others: nerdvpn’s invidious and redlib instances take about 5 seconds and some ~20k hashes, while privacyredirect’s instances are almost instant with fewer than 50 hashes each time

So they make the internet worse for poor people? I could get through 20k in a second, but someone with just an old laptop would take a few minutes, no?

i mean, kinda? you are absolutely right that someone with an old pc might need to wait a few extra seconds, but the speed is ultimately throttled by the browser

Just wait till they hit my homepage with a 200mb react frontend, 9 separate tracking / analytics scripts and generic shopify scripts on it :P

Isn’t that just the way things work in general though? If you have a worse computer, everything is going to be slower, broadly speaking.

Well, it’s the scrapers that are causing the problem.

I have had a similar experience. Most sites with Anubis take only a few seconds to go through, but I ran into I think it was some small blog where it took at least 5 minutes. Like someone mentioned, it may have been how they set it up with number of hashes required. The site that took forever for me seemed to have some exorbitant number like 5k or 50k (I don’t recall exactly).

This is fantastic and I appreciate that it scales well on the server side.

AI scraping is a scourge and I would love to know the collective amount of power wasted due to the necessity of countermeasures like this and add this to the total wasted by AI.

All this could be avoided by requiring people to submit a photo ID to log in to an account.

That’s awful, it means I would get my photo id stolen hundreds of times per day, or there’s also thisfacedoesntexists… and won’t work. For many reasons. Not all websites require an account. And even those that do, when they ask for “personal verification” (like dating apps) have a hard time to implement just that. Most “serious” cases use human review of the photo and a video that has your face and you move in and out of an oval shape…

Also you must drink a verification can !

I don’t think this would help:

By photo ID, I don’t mean just any photo, I mean “photo id” cryptographically signed by the state, certificates checked, database pinged, identity validated, the whole enchilada

That would have the same effect as just taking the site offline…

No one is giving a random site their photo ID.

You’d be surprised, many humans have simply no backbone, common sense nor self respect so I think they very probably would still, in large numbers. Proof is facebook and palantir.

My archive’s server uses Anubis and after initial configuration it’s been pain-free. Also, I’m no longer getting multiple automated emails a day about how the server’s timing out. It’s great.

We went from about 3000 unique “pinky swear I’m not a bot” visitors per (iirc) half a day to 20 such visitors. Twenty is much more in-line with expectations.

I don’t understand how/why this got so popular out of nowhere… the same solution has already existed for years in the form of haproxy-protection and a couple others… but nobody seems to care about those.

Probably because the creator had a blog post that got shared around at a point in time where this exact problem was resonating with users.

It’s not always about being first but about marketing.

And one has a cute catgirl mascot, the other a website that looks like a blockchain techbro startup.

I’m even willing to bet the number of people that set up Anubis just to get the cute splash screen isn’t insignificant.

Compare and contrast.

High-performance traffic management and next-gen security with multi-cloud management and observability. Built for the enterprise — open source at heart.

Sounds like some over priced, vacuous, do-everything solution. Looks and sounds like every other tech website. Looks like it is meant to appeal to the people who still say “cyber”. Looks and sounds like fauxpen source.

Weigh the soul of incoming HTTP requests to protect your website!

Cute. Adorable. Baby girl. Protect my website. Looks fun. Has one clear goal.

Probably a similar reason as to why we don’t hear about the other potential hundreds of competing products or solutions to the same problem (in general).

Luck.

It’s just not fair in our world.

Ooh can this work with Lemmy without affecting federation?

Yes, it would make lemmy as unsearchable as discord. Instead of unsearchable as pinterest.

That’s not true, search indexer bots should be allowed through from what I read here.

If you allow my SearXNG search scraper through, then an AI scraper is indistinguishable from it.

If you mean, “google and duckduckgo are whitelisted” then lemmy will only be searchable there, those specific whitelisted hosts. And google search index is also an AI scraper bot.

Yes.

Source: I use it on my instance and federation works fine

Thanks. Anything special configuring it?

I keep my server config in a public git repo, but I don’t think you have to do anything really special to make it work with lemmy. Since I use Traefik I followed the guide for setting up Anubis with Traefik.

I don’t expect to run into issues, as Anubis specifically looks for user-agent strings that appear like human users (i.e. they contain the word “Mozilla”, as most graphical web browsers do). Any request clearly coming from a bot that identifies itself is left alone, and Lemmy identifies itself as “Lemmy/{version} +{hostname}” in requests.
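
A rough sketch of that rule, as described in the comment above. This is not Anubis's real policy engine: `needs_challenge` is a hypothetical name, and the version and hostname in the Lemmy string are made-up values filling in the “Lemmy/{version} +{hostname}” template.

```python
def needs_challenge(user_agent: str) -> bool:
    """Hypothetical rule: challenge anything that presents itself as a browser."""
    return "Mozilla" in user_agent


if __name__ == "__main__":
    samples = [
        # A typical graphical browser string, which contains "Mozilla".
        "Mozilla/5.0 (X11; Linux x86_64; rv:128.0) Gecko/20100101 Firefox/128.0",
        # Federation traffic in the format quoted above (example values).
        "Lemmy/0.19.0 +example.org",
    ]
    for ua in samples:
        action = "challenge" if needs_challenge(ua) else "pass through"
        print(f"{action}: {ua}")
```

The trade-off is that a scraper could drop “Mozilla” from its user agent to skip the challenge, but then it is openly identifying itself as a non-browser client and can be handled by ordinary allow or deny rules instead.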

To be honest, I need to ask my admin about that!

We don’t use anubis but we use iocaine (?), see /0 for the announcement post

Yeah, it’s already deployed on slrpnk.net. I see it momentarily every time I load the site.

As long as it’s not configured improperly. When the Forgejo devs added it, it broke downloading images with Kubernetes for a moment. Basically you would need to make sure the user agent header for federation is allowed.

“Yes”, for any bits the user sees. The frontend UI can be behind Anubis without issues. The API, including both user and federation, cannot. We expect “bots” to use an API, so you can’t put human verification in front of it. These “bots” also include applications that aren’t aware of Anubis, or unable to pass it, like all third party Lemmy apps.

That does stop almost all generic AI scraping, though it does not prevent targeted abuse.

The API, including both user and federation, cannot.

This is theoretically an issue; however, in practice Anubis only weighs requests that appear to come from a browser: https://anubis.techaro.lol/docs/design/how-anubis-works

I just tested my instance with Jerboa and it seems to work just fine.

Just recently there was a guy on the NANOG List ranting about Anubis being the wrong approach and people should just cache properly then their servers would handle thousands of users and the bots wouldn’t matter. Anyone who puts git online has no-one to blame but themselves, e-commerce should just be made cacheable etc. Seemed a bit idealistic, a bit detached from the current reality.

Someone making an argument like that clearly does not understand the situation. Just 4 years ago, a robots.txt was enough to keep most bots away, and hosting personal git on the web required very little resources. With AI companies actively profiting off stealing everything, a robots.txt doesn’t mean anything. Now, even a relatively small git web host takes an insane amount of resources. I’d know - I host a Forgejo instance. Caching doesn’t matter, because diffs between two random commits are likely unique. Ratelimiting doesn’t matter, they will use different IP (ranges) and user agents. It would also heavily impact actual users “because the site is busy”.

A proof-of-work solution like Anubis is the best we have currently. The least possible impact to end users, while keeping most (if not all) AI scrapers off the site.

This would not be a problem if one bot scraped once, and the result was then mirrored to all on Big Tech’s dime (cloudflare, tailscale) but since they are all competing now, they think their edge is going to be their own more better scraper setup and they won’t share.

Maybe there should just be a web to torrent bridge so the data is pushed out once by the server and the swarm does the heavy lifting as a cache.

No, it’d still be a problem; every diff between commits is expensive to render to web, even if “only one company” is scraping it, “only one time”. Many of these applications are designed for humans, not scrapers.

If rendering data for scrapers was really the problem, then the solution is simple: just offer downloadable dumps of the publicly available information. That would be extremely efficient and cost fractions of pennies in monthly bandwidth. Plus, the data would be far more usable for whatever they are using it for.

The problem is trying to have freely available data, but for the host to maintain the ability to leverage this data later.

I don’t think we can have both of these.

Open source is also the AI scraper bots AND the internet itself, it is every character in the story.

<Stupidquestion>

What advantage does this software provide over simply banning bots via robots.txt?

</Stupidquestion>

Well, now that y’all put it that way, I think it was pretty naive from me to think that these companies, whose business model is basically theft, would honour a lousy robots.txt file…

Robots.txt expects that the client is respecting the rules, for instance, marking that they are a scraper.

AI scrapers don’t respect this trust, and thus robots.txt is meaningless.

TL;DR: You should have both due to the explicit breaking of the robots.txt contract by AI companies.

AI generally doesn’t obey robots.txt. That file is just notifying scrapers what they shouldn’t scrape, but relies on good faith of the scrapers. Many AI companies have explicitly chosen not to comply with robots.txt, thus breaking the contract, so this is a system that causes those scrapers that are not willing to comply to get stuck in a black hole of junk and waste their time. This is a countermeasure, but not a solution. It’s just way less complex than other options that just block these connections, but then make you get pounded with retries. This way the scraper bot gets stuck for a while and doesn’t waste as many of your resources blocking them over and over again.

the scrapers ignore robots.txt. It doesn’t really ban them - it just asks them not to access things, but they are programmed by assholes.
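
To make "it just asks" concrete, here is a small sketch using Python's standard `urllib.robotparser`. The bot name `ExampleAIBot`, the URL, and the helper `polite_fetch_allowed` are made up for illustration. Note where the check runs: inside the crawler, and only if the crawler's author bothered to call it.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt that asks one bot to stay away entirely.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def polite_fetch_allowed(user_agent: str, url: str) -> bool:
    """What a well-behaved crawler asks itself before requesting a URL."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://example.org/repo/commit/abc"
    print("ExampleAIBot allowed:", polite_fetch_allowed("ExampleAIBot", url))
    print("Other client allowed:", polite_fetch_allowed("SomeOtherClient", url))
```

Nothing on the server side enforces the answer. A scraper that never calls the check, or that sends a different user agent, gets served exactly like everyone else, which is the gap a challenge at the door is meant to close.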

The difference is:

- robots.txt is a promise without a door

- Anubis is a physical closed door, that opens up after some time

The problem is AI doesn’t follow robots.txt, so Cloudflare and Anubis developed a solution.

I mean, you could have read the article before asking, it’s literally in there…

I’d like to use Anubis but the strange hentai character as a mascot is not too professional

I actually really like the developer’s rationale for why they use an anime character as the mascot.

The whole blog post is worth reading, but the TL;DR is this:

Of course, nothing is stopping you from forking the software to replace the art assets. Instead of doing that, I would rather you support the project and purchase a license for the commercial variant of Anubis named BotStopper. Doing this will make sure that the project is sustainable and that I don’t burn myself out to a crisp in the process of keeping small internet websites open to the public.

At some level, I use the presence of the Anubis mascot as a “shopping cart test”. If you either pay me for the unbranded version or leave the character intact, I’m going to take any bug reports more seriously. It’s a positive sign that you are willing to invest in the project’s success and help make sure that people developing vital infrastructure are not neglected.

This is a great compromise honestly. More OSS devs need to be paid for their work and if an anime character helps do that, I’m all for it.

Honestly, good. Getting sick of the “professional” world being so goddamn stiff and boring. Push back against sanitized corporate aesthetics.

hentai?

Anime whatever. God I sound like a boomer.

Lol and yet you knew the term hentai but not anime?

I was a teenager once haha

hentai character

anime != hentai

I smile whenever I encounter the Anubis character in the wild. She’s holding up the free software internet on her shoulders after all.

It’s just image files, you can remove them or replace the images with something more corporate. The author does state they’d prefer you didn’t change the pictures, but the license doesn’t require adhering to their personal request. I know at least 2 sites I’ve visited previously had Anubis running with a generic checkmark or X that replaced the mascot

Oh no why can’t the web be even more boring and professional

It’s just not my style ok is all I’m saying and it’s nothing I’d be able to get past all my superiors as a recommendation of software to use.

then have them pay for it.

Support, pay, and get it :)

Ah so it is possible to change it

i’m sure you could replace it if you really wanted to

Fantastic article! Makes me less afraid to host a website with this potential solution

I get that website admins are desperate for a solution, but Anubis is fundamentally flawed.

It is hostile to the user, because it is very slow on older hardware and forces you to use JavaScript.

It is bad for the environment, because it wastes energy on useless computations similar to mining crypto. If more websites start using this, that really adds up.

But most importantly, it won’t work in the end. These scraping tech companies have much deeper pockets and can use specialized hardware that is much more efficient at solving these challenges than a normal web browser.

I agree. When I run into a page that demands I turn on Javascript for whatever purpose, I usually just leave. I wish there was some way to just not even see links to sites that require this.

I don’t like it either because my preferred way to use the web is either through the terminal or a very stripped down browser. I HATE tracking and JS

she’s working on a non cryptographic challenge so it taxes users’ CPUs less, and also thinking about a version that doesn’t require JavaScript

Sounds like the developer of Anubis is aware and working on these shortcomings.

Still, IMO these are minor short term issues compared to the scope of the AI problem it’s addressing.

To be clear, I am not minimizing the problems of scrapers. I am merely pointing out that this strategy of proof-of-work has nasty side effects and we need something better.

These issues are not short term. PoW means you are entering into an arms race against an adversary with bottomless pockets that inherently requires a ton of useless computations in the browser.

When it comes to moving towards something based on heuristics, which is what the developer was talking about there, that is much better. But that is basically what many others are already doing (like the “I am not a robot” checkmark) and fundamentally different from the PoW that I argue against.

Go do heuristics, not PoW.

You’re more than welcome to try and implement something better.

“You criticize society yet you participate in it. Curious.”

You can’t freely download and edit society. You can download and edit this piece of software here, because this is FOSS. You could download it now and change it, or improve it however you’d like. But, you can’t, because you’re just pretending to be concerned about issues that are made up. Or, if being generous from what I can read here, only you have encountered.

If you think that’s comparable then you’re dumber than I thought.

That last paragraph is nothing but defeatism

On the contrary, I’m hoping for a solution that is better than this.

Do you disagree with any part of my assessment? How do you think Anubis will work long term?

Anubis long term actually costs them millions and billions more in energy to run a browser and more code. Either way they have to add shit to the bots which costs all the companies money.

It takes like half a second on my Fairphone 3, and the CPU in this thing is absolute dogshit. I also doubt that the power consumption is particularly significant compared to the overhead of parsing, executing and JIT-compiling the 14MiB of JavaScript frameworks on the actual website.

It depends on the website’s setting. I have the same phone and there was one website where it took more than 20 seconds.

The power consumption is significant, because it needs to be. That is the entire point of this design. If it doesn’t take a significant number of CPU cycles, scrapers will just power through them. This may not be significant for an individual user, but it does add up when this reaches widespread adoption and everyone’s devices have to solve those challenges.

The usage of the phone’s CPU is usually around 1 W, but could jump to 5-6 W when boosting to solve a nasty challenge. At 20 s per challenge, that’s about 0.03 watt-hours. You need to see a thousand of these challenges to use up 0.03 kWh.

My last power bill was around 300 kWh, or 10,000 times more than what your phone would use on those thousand challenges. Or ten million times more than what this 20 s challenge would use.
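
The back-of-the-envelope numbers above can be checked in a few lines. The inputs are the figures quoted in the comment (5-6 W while boosting, 20 s per challenge, a 300 kWh bill); taking 5.5 W as the midpoint is an assumption, and `watt_hours` is just a helper name for this sketch.

```python
def watt_hours(watts: float, seconds: float) -> float:
    """Energy in watt-hours for a constant power draw over a duration."""
    return watts * seconds / 3600


CPU_WATTS = 5.5              # assumed midpoint of the quoted 5-6 W range
SECONDS_PER_CHALLENGE = 20   # the slow case mentioned in the thread
BILL_KWH = 300               # the quoted power bill

per_challenge_wh = watt_hours(CPU_WATTS, SECONDS_PER_CHALLENGE)
thousand_challenges_kwh = per_challenge_wh * 1000 / 1000  # x1000 challenges, Wh -> kWh

bill_vs_thousand = BILL_KWH / thousand_challenges_kwh
bill_vs_one = BILL_KWH * 1000 / per_challenge_wh

if __name__ == "__main__":
    print(f"one challenge:       {per_challenge_wh:.4f} Wh")
    print(f"thousand challenges: {thousand_challenges_kwh:.4f} kWh")
    print(f"bill / thousand challenges: {bill_vs_thousand:,.0f}")
    print(f"bill / one challenge:       {bill_vs_one:,.0f}")
```

With these inputs a single challenge comes to about 0.03 Wh, the bill is roughly 10,000 times the thousand-challenge figure, and roughly ten million times a single challenge.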

It is basically instantaneous on my 12 year old Kepler GPU Linux Box. It is substantially less impactful on the environment than AI tar pits and other deterrents. The Cryptography happening is something almost all browsers from the last 10 years can do natively that Scrapers have to be individually programmed to do. Making it several orders of magnitude beyond impractical for every single corporate bot to be repurposed for. Only to then be rendered moot, because it’s an open-source project that someone will just update the cryptographic algorithm for. These posts contain links to articles, if you read them you might answer some of your own questions and have more to contribute to the conversation.

It is basically instantaneous on my 12 year old Kepler GPU Linux Box.

It depends on what the website admin sets, but I’ve had checks take more than 20 seconds on my reasonably modern phone. And as scrapers get more ruthless, that difficulty setting will have to go up.

The Cryptography happening is something almost all browsers from the last 10 years can do natively that Scrapers have to be individually programmed to do. Making it several orders of magnitude beyond impractical for every single corporate bot to be repurposed for.

At best these browsers are going to have some efficient CPU implementation. Scrapers can send these challenges off to dedicated GPU farms or even FPGAs, which are an order of magnitude faster and more efficient. This is also not complex, a team of engineers could set this up in a few days.

Only to then be rendered moot, because it’s an open-source project that someone will just update the cryptographic algorithm for.

There might be something in changing to a better, GPU resistant algorithm like argon2, but browsers don’t support those natively so you would rely on an even less efficient implementation in js or wasm. Quickly changing details of the algorithm in a game of whack-a-mole could work to an extent, but that would turn this into an arms race. And the scrapers can afford far more development time than the maintainers of Anubis.

These posts contain links to articles, if you read them you might answer some of your own questions and have more to contribute to the conversation.

This is very condescending. I would prefer if you would just engage with my arguments.

At best these browsers are going to have some efficient CPU implementation.

Means absolutely nothing in context to what I said, or any information contained in this article. Does not relate to anything I originally replied to.

Scrapers can send these challenges off to dedicated GPU farms or even FPGAs, which are an order of magnitude faster and more efficient.

Not what’s happening here. Be Serious.

I would prefer if you would just engage with my arguments.

I did, your arguments are bad and you’re being intellectually disingenuous.

This is very condescending.

Yeah, that’s the point. Very Astute

If you’re deliberately belittling me I won’t engage. Goodbye.

Scrapers can send these challenges off to dedicated GPU farms or even FPGAs, which are an order of magnitude faster and more efficient.

Let’s assume for the sake of argument, an AI scraper company actually attempted this. They don’t, but let’s assume it anyway.

The next Anubis release could include (for example), SHA256 instead of SHA1. This would be a simple, and basically transparent update for admins and end users. The AI company that invested into offloading the PoW to somewhere more efficient now has to spend significantly more resources changing their implementation than what it took for the devs and users of Anubis.

Yes, it technically remains a game of “cat and mouse”, but heavily stacked against the cat. One step for Anubis is 2000 steps for a company reimplementing its client in more efficient hardware. Most of the Anubis changes can even be done without impacting the end users at all. That’s a game AI companies aren’t willing to play, because they’ve basically already lost. It doesn’t really matter how “efficient” the implementation is, if it can be rendered unusable by a small Anubis update.

How will Anubis attack if browsers start acting like manual scrapers used by AI companies to collect information?

Because OpenAI is planning to release an AI-powered browser, what happens if it ends up using it as another way to collect information?

Blocking all Chromium browsers, I don’t think, is a good idea.

A JavaScript-less check was released recently; I just read about it. It uses some HTML refresh tag and a delay. It’s not the default though, since it’s new.

The source I assume: challenges/metarefresh.
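
For the curious, the general technique of "a refresh tag and a delay" can be sketched like this. This is not taken from Anubis's source: the function names, the `/pass` URL, and the timing check are all assumptions about how such a check could work, based only on the description above.

```python
from html import escape


def refresh_page(target_url: str, delay_seconds: int) -> str:
    """Build a page that tells the browser to load `target_url` after a delay."""
    safe_url = escape(target_url, quote=True)
    return (
        "<!doctype html>\n"
        "<html><head>\n"
        f'<meta http-equiv="refresh" content="{delay_seconds}; url={safe_url}">\n'
        "</head><body>Checking your browser, please wait.</body></html>\n"
    )


def waited_long_enough(issued_at: float, arrived_at: float, delay_seconds: int) -> bool:
    """Server-side check: the follow-up request must not arrive before the delay."""
    return arrived_at - issued_at >= delay_seconds


if __name__ == "__main__":
    # "/pass?id=example" is a placeholder, not a real endpoint.
    print(refresh_page("/pass?id=example", 5))
```

A real browser follows the refresh on its own after the delay, with no JavaScript involved. A naive scraper either never follows it or follows it immediately, and both cases are easy to tell apart on the server.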

I had seen that prompt, but never searched about it. I found it a little annoying, mostly because I didn’t know what it was for, but now I won’t mind. I hope more solutions are developed :D